Hypertable on HDFS (Hadoop) Installation

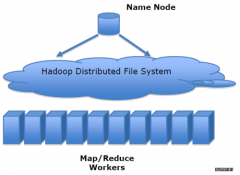

Hadoop - HDFS Installation Guide

Procedure 4.2. Hypertable on HDFS

-

Create the working directory

    $ hadoop fs -mkdir /hypertable
    $ hadoop fs -chmod 777 /hypertable
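If `/hypertable` already exists, `hadoop fs -mkdir` reports an error, so re-running this step can fail. Below is a minimal idempotent sketch. It assumes the `hadoop` client is on `PATH` and supports `hadoop fs -test -d` (Hadoop 1.x does). `ensure_hdfs_dir` is a hypothetical helper and is not part of Hadoop or Hypertable.

```shell
# Hypothetical helper (not part of Hadoop/Hypertable): create an HDFS
# directory only if it is missing, then set its permissions.
# Assumes the `hadoop` client is on PATH.
ensure_hdfs_dir() {
    hdfs_dir=$1
    hdfs_mode=${2:-777}   # 777 mirrors the chmod in the step above
    # `hadoop fs -test -d` exits 0 when the path exists and is a directory
    if ! hadoop fs -test -d "$hdfs_dir"; then
        hadoop fs -mkdir "$hdfs_dir" || return 1
    fi
    hadoop fs -chmod "$hdfs_mode" "$hdfs_dir"
}

# Usage: ensure_hdfs_dir /hypertable 777
```

Because the existence check runs first, repeating `ensure_hdfs_dir /hypertable` only reapplies the `chmod`.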

-

Install the Java runtime environment and Hadoop

    yum install java-1.7.0-openjdk
    yum localinstall http://ftp.cuhk.edu.hk/pub/packages/apache.org/hadoop/common/hadoop-1.1.2/hadoop-1.1.2-1.x86_64.rpm
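The `jrun` step exports `JAVA_HOME=/usr` and `HADOOP_HOME=/usr`. This is presumably because these packages install their launchers under `/usr/bin`. The small check below assumes that layout. The hypothetical `check_home` helper only tests for an executable at `<prefix>/bin/<tool>`.

```shell
# Hypothetical sanity check: confirm that a *_HOME prefix contains the
# expected executable under bin/ before exporting it.
check_home() {
    prefix=$1
    tool=$2
    if [ -x "$prefix/bin/$tool" ]; then
        echo "ok: $prefix/bin/$tool"
    else
        echo "missing: $prefix/bin/$tool" >&2
        return 1
    fi
}

# Usage (assuming the RPMs placed their launchers in /usr/bin):
#   check_home /usr java && check_home /usr hadoop
```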

-

Fix the jrun bug

    cp /opt/hypertable/current/bin/jrun /opt/hypertable/current/bin/jrun.old
    vim /opt/hypertable/current/bin/jrun

In jrun, comment out the HT_JAR line that uses the hard-coded /opt/hypertable/doug/current path and add one that uses /opt/hypertable/current:

    #HT_JAR=`ls -1 /opt/hypertable/doug/current/lib/java/*.jar | grep "hypertable-[^-]*.jar" | awk 'BEGIN {FS="/"} {print $NF}'`
    HT_JAR=`ls -1 /opt/hypertable/current/lib/java/*.jar | grep "hypertable-[^-]*.jar" | awk 'BEGIN {FS="/"} {print $NF}'`

Then set the environment variables:

    export JAVA_HOME=/usr
    export HADOOP_HOME=/usr
    export HYPERTABLE_HOME=/opt/hypertable/current
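The same path fix can be applied non-interactively instead of editing `jrun` in `vim`. This minimal sketch assumes the only problem is the stray `/opt/hypertable/doug/current/` prefix shown above. `fix_jrun` is a hypothetical name. Unlike the manual edit, it rewrites the path in place rather than keeping a commented-out copy of the old line. `sed -i.old` still leaves the same `jrun.old` backup that the `cp` command creates.

```shell
# Hypothetical helper: rewrite the wrong HT_JAR search path in jrun.
# `sed -i.old` edits the file in place and saves the original as FILE.old.
fix_jrun() {
    jrun=${1:-/opt/hypertable/current/bin/jrun}
    sed -i.old 's#/opt/hypertable/doug/current/#/opt/hypertable/current/#g' "$jrun" || return 1
    # Fail loudly if any reference to the old path survived
    if grep -q '/opt/hypertable/doug/' "$jrun"; then
        echo "fix_jrun: stale path still present in $jrun" >&2
        return 1
    fi
}

# Usage: fix_jrun /opt/hypertable/current/bin/jrun
```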

-

hypertable.cfg

    # cat conf/hypertable.cfg
    #
    # hypertable.cfg
    #

    # HDFS Broker
    #HdfsBroker.Hadoop.ConfDir=/etc/hadoop/conf
    HdfsBroker.Hadoop.ConfDir=/etc/hadoop

    # Ceph Broker
    CephBroker.MonAddr=192.168.6.25:6789

    # Local Broker
    DfsBroker.Local.Root=fs/local

    # DFS Broker - for clients
    DfsBroker.Port=38030

    # Hyperspace
    Hyperspace.Replica.Host=localhost
    Hyperspace.Replica.Port=38040
    Hyperspace.Replica.Dir=hyperspace

    # Hypertable.Master
    #Hypertable.Master.Host=localhost
    Hypertable.Master.Port=38050

    # Hypertable.RangeServer
    Hypertable.RangeServer.Port=38060

    Hyperspace.KeepAlive.Interval=30000
    Hyperspace.Lease.Interval=1000000
    Hyperspace.GracePeriod=200000

    # ThriftBroker
    ThriftBroker.Port=38080
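When checking which values will actually take effect, it is easy to misread commented-out lines such as `#HdfsBroker.Hadoop.ConfDir=/etc/hadoop/conf`. This minimal lookup sketch assumes one `Key=Value` pair per line, as in the file above. `cfg_get` is hypothetical and not a Hypertable tool.

```shell
# Hypothetical helper (not part of Hypertable): print the value of KEY
# from a Hypertable .cfg file, assuming one Key=Value pair per line.
# Commented-out lines are skipped; the first active assignment wins.
cfg_get() {
    awk -v key="$2" '
        /^[ \t]*#/ { next }                 # ignore comment lines
        {
            line = $0
            sub(/^[ \t]+/, "", line)        # trim leading whitespace
            eq = index(line, "=")
            if (eq == 0) next               # not a Key=Value line
            k = substr(line, 1, eq - 1)
            sub(/[ \t]+$/, "", k)           # trim whitespace before "="
            if (k == key) {
                v = substr(line, eq + 1)
                sub(/^[ \t]+/, "", v)       # trim whitespace after "="
                print v
                exit
            }
        }' "$1"
}

# Usage, with the file above:
#   cfg_get conf/hypertable.cfg DfsBroker.Port              # prints 38030
#   cfg_get conf/hypertable.cfg HdfsBroker.Hadoop.ConfDir   # prints /etc/hadoop
```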

/etc/hadoop/hdfs-site.xml

    # cat /etc/hadoop/hdfs-site.xml
    <?xml version="1.0"?>
    <?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

    <!-- Put site-specific property overrides in this file. -->

    <configuration>
      <property>
        <name>dfs.name.dir</name>
        <value>/var/hadoop/name1</value>
        <description> </description>
      </property>
      <property>
        <name>dfs.data.dir</name>
        <value>/var/hadoop/hdfs/data1</value>
        <description> </description>
      </property>
      <property>
        <name>dfs.replication</name>
        <value>2</value>
      </property>
    </configuration>

-
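Before starting the broker, you can read back the values in `/etc/hadoop/hdfs-site.xml` and later compare them with what the broker logs, for example `HdfsBroker.dfs.replication=2`. This rough sketch uses only `tr` and `sed`. It assumes each `<value>` directly follows its `<name>`, as in the file above, and it is not a real XML parser. `hdfs_prop` is a hypothetical name.

```shell
# Hypothetical helper: print the <value> of a named property from a
# Hadoop *-site.xml file. Newlines are removed first so the pattern also
# matches when <name> and <value> sit on different lines.
hdfs_prop() {
    # Escape dots so "dfs.replication" is matched literally by sed
    prop=$(printf '%s' "$2" | sed 's/\./\\./g')
    tr -d '\n' < "$1" |
        sed -n "s#.*<name>[[:space:]]*$prop[[:space:]]*</name>[[:space:]]*<value>\([^<]*\)</value>.*#\1#p"
}

# Usage: hdfs_prop /etc/hadoop/hdfs-site.xml dfs.replication
```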

Start the DFS broker

    # /opt/hypertable/current/bin/set-hadoop-distro.sh cdh4
    Hypertable successfully configured for Hadoop cdh4

    # /opt/hypertable/current/bin/start-dfsbroker.sh hadoop
    DFS broker: available file descriptors: 1024
    Started DFS Broker (hadoop)
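To confirm from a script that the broker is accepting connections on `DfsBroker.Port` (38030 in the `hypertable.cfg` above), a simple poll loop is enough. This minimal sketch relies on bash's `/dev/tcp` redirection, so it must run under bash. `wait_for_port` is a hypothetical helper. Against a firewalled remote host, each attempt may block until the system connect timeout.

```shell
# Hypothetical helper: poll HOST:PORT once per second until a TCP connect
# succeeds or TIMEOUT attempts have failed. Requires bash (/dev/tcp).
wait_for_port() {
    wp_host=$1 wp_port=$2 wp_timeout=${3:-30}
    wp_tries=0
    while [ "$wp_tries" -lt "$wp_timeout" ]; do
        # Opening /dev/tcp/HOST/PORT makes bash attempt a TCP connection;
        # the subshell closes the descriptor again when it exits.
        if (exec 3<>"/dev/tcp/$wp_host/$wp_port") 2>/dev/null; then
            return 0
        fi
        sleep 1
        wp_tries=$((wp_tries + 1))
    done
    return 1
}

# Usage: wait_for_port localhost 38030 30 && echo "DFS broker is listening"
```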

Check the startup log

    # tail -f /opt/hypertable/current/log/DfsBroker.hadoop.log
    log4j:WARN No appenders could be found for logger (org.apache.hadoop.conf.Configuration).
    log4j:WARN Please initialize the log4j system properly.
    HdfsBroker.dfs.client.read.shortcircuit=false
    HdfsBroker.dfs.replication=2
    HdfsBroker.Server.fs.default.name=hdfs://namenode.example.com:9000
    Apr 23, 2013 6:43:18 PM org.hypertable.AsyncComm.IOHandler DeliverEvent
    INFO: [/192.168.6.25:53556 ; Tue Apr 23 18:43:18 HKT 2013] Connection Established
    Apr 23, 2013 6:43:18 PM org.hypertable.DfsBroker.hadoop.ConnectionHandler handle
    INFO: [/192.168.6.25:53556 ; Tue Apr 23 18:43:18 HKT 2013] Disconnect - COMM broken connection : Closing all open handles from /192.168.6.25:53556
    Closed 0 input streams and 0 output streams for client connection /192.168.6.25:53556
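`tail -f` keeps following the file, so a one-shot scan is easier to use from a script. This minimal sketch assumes the log path shown above. `check_dfsbroker_log` is a hypothetical helper that prints the `HdfsBroker.*` settings the broker reported and flags lines containing `ERROR`, `SEVERE` or `Exception`. The two `log4j:WARN` lines are not flagged, since they only say that log4j itself is unconfigured.

```shell
# Hypothetical helper: summarize a DfsBroker log without following it.
# Returns 1 if any line looks like an error or a Java exception.
check_dfsbroker_log() {
    log=${1:-/opt/hypertable/current/log/DfsBroker.hadoop.log}
    echo "== effective settings =="
    grep '^HdfsBroker\.' "$log"
    echo "== problems =="
    if grep -E 'ERROR|SEVERE|Exception' "$log"; then
        return 1
    fi
    echo "none found"
}

# Usage: check_dfsbroker_log /opt/hypertable/current/log/DfsBroker.hadoop.log
```

Run against the log above, it would list the three `HdfsBroker.*` lines and report no problems.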
